The State of Agents Today

By Ravish Ailinani and Siddharth Pratahkal

In its simplest terms, an AI agent is a software system that pursues goals and takes actions autonomously on a user's behalf. Unlike traditional automated scripts or bots that follow predefined instructions, agentic AI systems solve problems dynamically: they observe their environment, reason about what to do, use various tools, decide on a course of action, and take in real-time feedback before iterating, all with minimal human intervention.

Early forms of automation were typically limited to rigid sequences such as rule-based if-this-then-that workflows or robotic process automation in enterprise software. Several characteristics distinguish agentic AI from an AI app or assistant:

- Agents operate with a degree of autonomy. They don't require the user to prompt every action. They can plan multi-step solutions, adapt to new information, and recover from errors in real-time.

- They’re goal-directed and proactive, able to figure out the next step towards a goal without explicit instructions at each turn.

For example, if the goal is to schedule a meeting, a generative agent might not only draft the invitation email but also decide to look up the recipient’s timezone and add a virtual meeting link via an API before sending. In essence, agents marry the prediction capabilities of AI models with the adaptability of software, creating a new paradigm of AI-driven automation.

Key components of the modern agent ecosystem

As AI agents rise in prominence, a supporting agent stack or ecosystem has emerged to enable their capabilities. Much like how web applications needed databases and web servers, AI agents rely on an infrastructure stack tailored to their needs. Three key layers or components have come into focus:

- The model (agent brain): Foundation models (GPT-5, Claude Sonnet 4.5, Gemini 2.5 Pro, etc.) supply reasoning. The model interprets instructions, reasons through tasks (chain-of-thought, ReAct, etc.), considers feedback, iterates, and generates actions/plans.

- Tools and external interfaces: Agents extend their reach via tools and APIs. These connect an agent’s cognitive core to the outside world: whether retrieving information, invoking APIs, or controlling a browser.

- Orchestration (agent runtime): Wrapping around the model and tools is the orchestration layer, the agent’s runtime logic that manages context, memory, and decision flow. This includes the agent’s short-term memory (tracking the ongoing conversation or task state) and long-term memory (retrieving relevant facts from a knowledge base). It also includes the planning/reasoning frameworks that structure the agent’s actions (for instance, an agent may explicitly alternate between thinking and acting steps, as in the ReAct loop). Fine-tuning and retrieval-augmented generation (RAG) are the usual ways to inject proprietary data.
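The three layers above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `scripted_model` is a hard-coded stand-in for a real foundation model, `lookup_timezone` is a hypothetical tool, and the `history` list plays the role of short-term memory. The loop alternates between a thinking step and an acting step, in the spirit of ReAct.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class AgentState:
    goal: str
    history: list[str] = field(default_factory=list)  # short-term memory


def scripted_model(state: AgentState) -> dict:
    """Stand-in for a foundation model: picks the next step from the history."""
    observations = [h for h in state.history if h.startswith("observation: ")]
    if not observations:
        # Nothing observed yet, so ask for a tool call.
        return {"thought": "I need the recipient's timezone.",
                "action": "lookup_timezone", "input": "alice@example.com"}
    timezone = observations[-1].removeprefix("observation: ")
    return {"thought": "I have what I need.",
            "final": f"Propose the meeting in {timezone} time."}


# Tool layer: name -> callable. The tool and its return value are hypothetical.
TOOLS: dict[str, Callable[[str], str]] = {
    "lookup_timezone": lambda email: "Europe/Berlin",
}


def run_agent(goal: str, model=scripted_model, max_steps: int = 5) -> str:
    """Orchestration layer: think, act, observe, repeat until done."""
    state = AgentState(goal)
    for _ in range(max_steps):
        step = model(state)                                   # think
        state.history.append(f"thought: {step['thought']}")
        if "final" in step:
            return step["final"]
        observation = TOOLS[step["action"]](step["input"])    # act
        state.history.append(f"observation: {observation}")   # observe
    return "stopped: step budget exhausted"


print(run_agent("schedule a meeting with Alice"))
```

The `max_steps` budget is a design choice worth keeping in any real runtime: it bounds cost and prevents an agent from looping indefinitely when the model never produces a final answer.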

The rise of agent protocols: MCP, A2A, AG-UI, and many more…

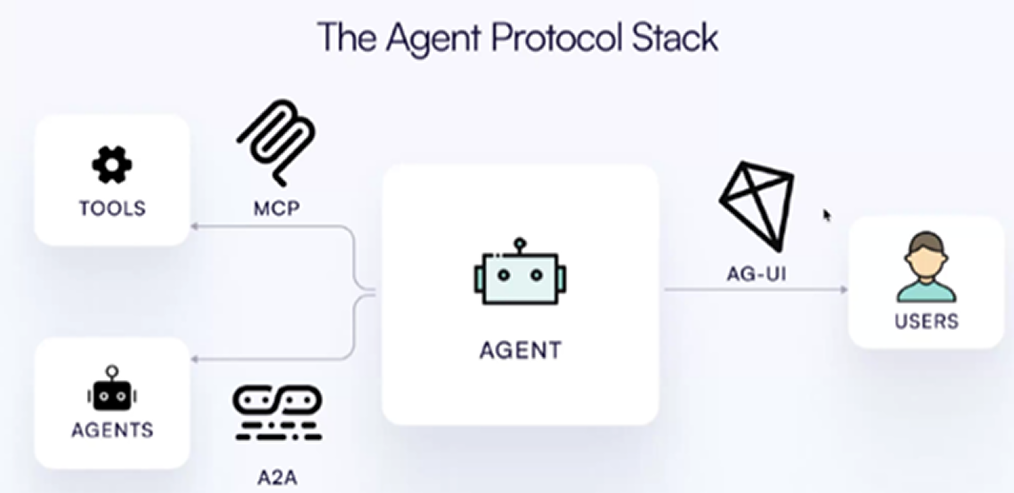

Just as traditional software relies on standardized protocols to manage a myriad of dependencies, a set of open protocols is emerging to standardize how agents interact with data, tools, and each other. Anthropic’s Model Context Protocol (MCP), introduced in late 2024, is a common framework (currently the most popular) for agents to interact with tools. Essentially serving as a “USB-C port” for AI models to access diverse data and tools, MCP reduces the backend complexity of integrating data fetched in different formats from different applications.
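To make the tool-access idea concrete, here is a minimal sketch of the message shape involved. MCP messages are JSON-RPC 2.0, with methods such as `tools/list` and `tools/call`. The handler below is not the official SDK and has no transport layer: it is an in-process toy, the `get_timezone` tool is hypothetical, and the tool listing is simplified (real listings also describe each tool's input schema).

```python
import json

# Hypothetical tool registry; the tool name and its data are made up for illustration.
TOOLS = {
    "get_timezone": {
        "description": "Return the IANA timezone for a city.",
        "handler": lambda args: {"Berlin": "Europe/Berlin"}.get(args["city"], "unknown"),
    }
}


def handle(raw: str) -> str:
    """Answer a single JSON-RPC 2.0 request with an MCP-style result."""
    req = json.loads(raw)
    if req["method"] == "tools/list":
        result = {"tools": [{"name": name, "description": tool["description"]}
                            for name, tool in TOOLS.items()]}
    elif req["method"] == "tools/call":
        tool = TOOLS[req["params"]["name"]]
        text = tool["handler"](req["params"].get("arguments", {}))
        result = {"content": [{"type": "text", "text": text}]}
    else:
        # -32601 is the standard JSON-RPC "method not found" code.
        return json.dumps({"jsonrpc": "2.0", "id": req["id"],
                           "error": {"code": -32601, "message": "Method not found"}})
    return json.dumps({"jsonrpc": "2.0", "id": req["id"], "result": result})


request = {"jsonrpc": "2.0", "id": 1, "method": "tools/call",
           "params": {"name": "get_timezone", "arguments": {"city": "Berlin"}}}
print(handle(json.dumps(request)))
```

Because every tool is reached through the same request shape, an agent only needs one client implementation regardless of how many tools sit behind it.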

In April 2025, Google took this concept a step further with Agent2Agent (A2A), an open protocol for cross-agent collaboration. A2A allows AI agents to communicate directly, exchange information securely, and coordinate actions regardless of which vendor or framework they were built on. It also introduces a way for agents to discover each other’s capabilities via machine-readable “agent cards.”
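Capability discovery through agent cards might look roughly like the sketch below. The card fields are modeled loosely on the A2A agent card and heavily simplified; the agents, URLs, and skill tags are all hypothetical, and a real client would fetch each card over HTTP rather than read it from a list.

```python
# Hypothetical, simplified agent cards (a real card carries more metadata).
AGENT_CARDS = [
    {
        "name": "Scheduler",
        "description": "Books meetings across calendars.",
        "url": "https://agents.example.com/scheduler",
        "version": "1.0.0",
        "skills": [{"id": "book_meeting", "name": "Book meeting",
                    "tags": ["calendar", "scheduling"]}],
    },
    {
        "name": "ClaimsProcessor",
        "description": "Triages insurance claims.",
        "url": "https://agents.example.com/claims",
        "version": "0.3.0",
        "skills": [{"id": "triage_claim", "name": "Triage claim",
                    "tags": ["insurance", "claims"]}],
    },
]


def find_agents(cards: list[dict], tag: str) -> list[str]:
    """Return the names of agents advertising at least one skill with this tag."""
    return [
        card["name"]
        for card in cards
        if any(tag in skill.get("tags", []) for skill in card.get("skills", []))
    ]


print(find_agents(AGENT_CARDS, "scheduling"))  # ['Scheduler']
```

The point of the card is that the calling agent never needs to know how the other agent is implemented; it only matches advertised skills against the task at hand.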

AG-UI is another fast-growing protocol that connects agents to users via the frontend. Its key features include event-driven communication (standardized stream of JSON events over HTTP or SSE), dynamic UI generation and real-time synchronization (bidirectional between user frontend and agent’s backend). AG-UI was created to solve the "last mile" problem of AI agent development, transforming agents into collaborative, user-centric tools.
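The event-driven pattern described above can be sketched as a stream of JSON events framed as server-sent events (SSE), where each frame is a `data:` line followed by a blank line. The event type names and the `delta` field below are illustrative assumptions rather than a faithful copy of the AG-UI specification, and the "network" is just a Python generator.

```python
import json
from typing import Iterable, Iterator


def agent_events(reply: str) -> Iterator[dict]:
    """Backend side: emit lifecycle events plus one content event per word."""
    yield {"type": "RUN_STARTED"}
    for word in reply.split():
        yield {"type": "TEXT_MESSAGE_CONTENT", "delta": word + " "}
    yield {"type": "RUN_FINISHED"}


def to_sse(events: Iterable[dict]) -> Iterator[str]:
    """Frame each JSON event as a server-sent event."""
    for event in events:
        yield f"data: {json.dumps(event)}\n\n"


def render(frames: Iterable[str]) -> str:
    """Frontend side: rebuild the visible message from streamed deltas."""
    text = ""
    for frame in frames:
        event = json.loads(frame.removeprefix("data: ").strip())
        if event["type"] == "TEXT_MESSAGE_CONTENT":
            text += event["delta"]
    return text.strip()


print(render(to_sse(agent_events("Meeting booked for Tuesday"))))
```

Streaming typed events instead of a single final string is what lets a frontend show progress, render partial output, and react to lifecycle changes while the agent is still working.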

In effect, AG-UI (agent-to-frontend), A2A (agent-to-agent), and MCP (agent-to-tools) form a complementary protocol stack for building modern AI agent systems, as highlighted in the diagram below.

It’s still early days for these standards, with new developments arriving every few weeks. It remains to be seen whether these protocols (and other standardization initiatives like OASF, AGNTCY, etc.) converge on a single standard (like the IEEE 802.11 “WiFi” standards) or run on parallel tracks (like different countries’ plug sockets).

Orchestration and multi-agent collaboration

As individual agents become more capable, we see scenarios where multiple agents work together, effectively forming agent teams. Complex tasks might be broken down among specialized agents or one agent might supervise/evaluate another. For example, one agent could be an LLM-based auto-rater that critiques or scores the output of another agent, creating a feedback loop to improve quality. Such architectures introduce new possibilities: an agent could spawn helper sub-agents for subtasks, or two agents with different skills could negotiate to solve a problem.

However, multi-agent systems also add complexity, which is where orchestration frameworks come in. Emerging platforms like LangChain, LangGraph, CrewAI, and others provide structures to coordinate these interactions (managing messaging between agents, handling concurrency, etc.). Orchestration also involves maintaining a shared state or memory from which all agents can draw. Meanwhile, research on agent reasoning continues to refine techniques like self-reflection and tree-of-thought search to make agents more reliable.
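The auto-rater feedback loop and the shared state mentioned above can be illustrated with a small sketch. Both agents here are plain functions standing in for LLM calls, and the scoring rule, threshold, and task are invented for the example; a framework such as LangGraph or CrewAI would supply its own abstractions for the same pattern.

```python
def writer(task: str, feedback: list[str]) -> str:
    """Worker agent (stub): produces a draft and reacts to reviewer notes."""
    draft = f"Summary of {task}"
    if "add sources" in feedback:
        draft += " (sources: internal wiki)"
    return draft


def rater(draft: str) -> tuple[float, str]:
    """Auto-rater agent (stub): returns a score and a note for the writer."""
    if "sources" in draft:
        return 0.9, "ok"
    return 0.4, "add sources"


def orchestrate(task: str, threshold: float = 0.8, max_rounds: int = 3) -> dict:
    """Run writer and rater in a loop over one shared state dictionary."""
    state = {"task": task, "feedback": [], "rounds": 0, "draft": None, "score": 0.0}
    while state["rounds"] < max_rounds:
        state["rounds"] += 1
        state["draft"] = writer(state["task"], state["feedback"])
        state["score"], note = rater(state["draft"])
        if state["score"] >= threshold:
            break  # quality bar met, stop iterating
        state["feedback"].append(note)  # feed the critique back to the writer
    return state


result = orchestrate("the Q3 report")
print(result["rounds"], result["draft"])
```

In this run the first draft is rejected, the critique is written to the shared state, and the second draft passes, so the loop ends after two rounds. The `max_rounds` cap keeps two disagreeing agents from iterating forever.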

For now, many multi-agent setups remain experimental (not yet widely deployed in production), but the trajectory is clear: agentic AI is moving towards higher-level cognition. Just as human organizations rely on teams with diverse roles, future AI solutions might involve a constellation of agents (some experts in certain domains, others orchestrators or validators) working in concert. Effective orchestration, including observability and security, will be the key to harnessing their collective intelligence without chaos.

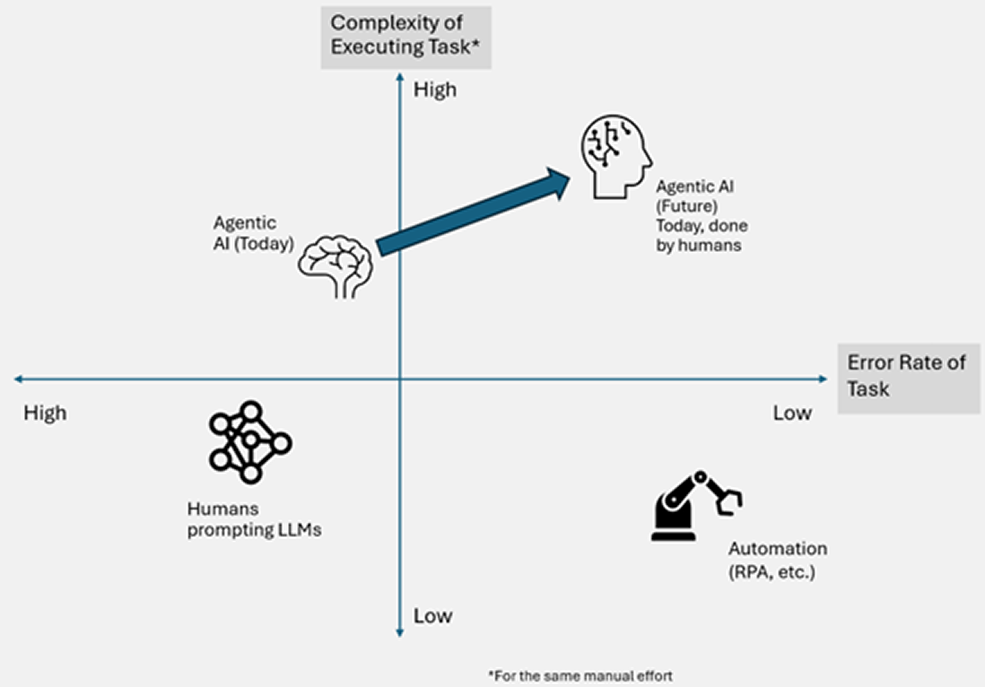

Mapping agent capabilities: complexity vs. tolerance matrix

To better understand where AI agents stand today (and where they are headed), it’s useful to map them along two dimensions: the complexity of tasks they can handle, and the tolerance for errors in those tasks. The diagram below presents a conceptual 2x2 matrix of these dimensions:

This evolution of AI from today’s state towards much higher-complexity, low-error-tolerance work has profound implications for the labor market. As agentic AI crosses into the upper-right quadrant, handling complex tasks with near-deterministic accuracy, its impact on the workforce is accelerating. Research from the Gerald Huff Fund and NSF projects that AI will disrupt approximately 25% of all jobs over the next three years. Wholesale Trade, Retail Trade, Finance and Insurance, Educational Services, and Real Estate will face the highest displacement rates, precisely because these sectors rely heavily on technological skills AI excels at performing. Federal Reserve analysis reinforces this pattern: occupations that adopted generative AI most intensively (like computer and mathematical roles) saw the largest increase in unemployment, while blue-collar and personal service jobs with limited AI applicability experienced relatively smaller impacts.

Emerging opportunities and ecosystem players

Every major AI player is investing in agentic capabilities, ensuring their platforms can both host and leverage AI agents. Funding for AI startups has reached record levels: global funding hit an astronomical ~$62B in Q1 ’25 and $45B in Q3 ’25, compared with $23B in Q3 ’24. Analysts estimate that the agentic AI market will soar from ~$7B in 2025 to over $50B in 2030. This frenzy of activity reflects a shared belief that agentic AI could be as transformational as the rise of mobile or cloud apps.
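A quick back-of-the-envelope check shows what these figures imply. The sketch below uses only the rounded numbers quoted above, so the results are approximate; because the 2030 estimate is "over $50B," the growth rate is a lower bound.

```python
def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate between two values."""
    return (end / start) ** (1 / years) - 1


# Market estimate: ~$7B (2025) to over $50B (2030), i.e. five years of growth.
market_growth = cagr(7, 50, 5)

# Q3 funding: $45B in Q3 '25 versus $23B in Q3 '24.
funding_change = 45 / 23 - 1

print(f"Implied market CAGR: {market_growth:.0%}")       # roughly 48% per year
print(f"Q3 year-over-year change: {funding_change:.0%}")  # roughly 96%
```

In other words, the analyst estimate assumes the market grows by nearly half every year for five years, and Q3 AI funding roughly doubled year over year.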

Broadly, new ventures in the agent space fall into a few categories:

- Infrastructure & enabling tools: Companies building memory stores, orchestration frameworks, and safety layers to make agents more reliable (for example, startups offering different kinds of storage for long-term agent memory, or browsers and APIs specialized for agent automation).

- Horizontal agent platforms: General-purpose AI assistants for common business functions across industries. These agents handle tasks like marketing content generation, customer support automation, or coding assistance, and can be adapted to many enterprise settings.

- Vertical agents: Domain-specific AI solutions tailored to particular industries or workflows. Finance and insurance are especially active areas, with agents for investment research or automated claims processing, while healthcare-focused agents target use cases like medical coding and patient triage. Industry analysts predict the vertical AI market will surge as specialized agents deliver concrete ROI by automating entire job workflows.

Agentic AI is early yet feels inevitable. From an investor’s perspective, opportunities exist at each layer of this ecosystem. Horizontal agent platforms may achieve scale by becoming the go-to AI coworkers for broad enterprise use. Meanwhile, a successful vertical agent in a high-value domain might capture a SaaS-like market share while having an even more disruptive impact: by directly taking over labor-intensive processes, it meaningfully expands the total addressable market (TAM).

Building the “picks and shovels” that all agents rely on could yield enduring value if agent adoption continues to grow. There’s still much work to do in reliability, safety, and integration. Winners will either own an irreplaceable workflow with proprietary data or facilitate the infrastructure every agent stack needs. As the ecosystem shakes out, we will likely see some consolidation, but for now, the agent gold rush shows no sign of slowing.

References

- What is an AI agent?

- Agents

- Agents Companion

- Introducing the Model Context Protocol

- Announcing the Agent2Agent Protocol (A2A)

- AG-UI

- The Agent Stack Emerges

- Impact of AI on workers in the United States

- Is AI Contributing to Rising Unemployment? Evidence from Occupational Variation

- Q3 Venture Funding Jumps 38% As More Massive Rounds Go To AI Giants And Exits Gain Steam

- AI Agents Market Size, Share, Growth & Latest Trends

- AI Agents Research Report 2024-2029: Market to Grow by $42 Billion, Driven by Demand for Hyper-Personalized Digital Experiences and Expansion of AI-Powered SaaS Platforms
